We really do appreciate the feedback, and hopefully we can improve on this aspect in the near future. Good luck getting your processor. If there are any questions our product testers can help out with, I’m happy to facilitate this for you.

I am also disappointed with many Choice tests including the food processor test.

Brands chosen: 5 Brevilles, 6 Sunbeams, 6 KitchenAids, not one Philips? Smith and Noble sells through Harris Scarfe, they have 3 models that look like they might be OK, not one was tested?

The 6 KitchenAids in particular look like variations on only 3 different models. Two of the Brevilles were almost identical with only trivial differences. Ditto two of the Sunbeams.

Durability - how about some “torture testing” to simulate a few years of hard use? Such as crushing ice over and over for a while? Deliberately overload the machine and see what happens - good machines will trip an overheat switch that can be reset (manually or automatically); lesser machines will blow a thermal fuse (requires repair); junk machines will burn out or break. Try shredding frozen cabbage or grating frozen carrot (or pine wood?) for a workout of the grater blades. Look at design for durability - sturdy or fragile blade assemblies? Is there a top bearing in the lid to support slicer blade spindles? Is the bearing replaceable? Is it made of metal (ok), nylon (ok) or plastic (junk)? Open the machines up and assess if they are made to be repaired or throw-away. Contact manufacturers and get prices for common parts - is a replacement blade available and how expensive is it? Many of the lesser machines will have no spares available at all, which should be a reason for a fail.

I have been reading Choice since the 1970s. I remember when colour TV first came in and Choice did a really comprehensive test, including checking screens for measurable types of distortion, opening up the backs of the TVs and assessing how easy or difficult it was to work on them. Choice tests to me now seem to be more subjective, less scientifically rigorous and less comprehensive than they used to be.

I’d also like to see Choice keep old tests available in an online archive. It serves three purposes: 1. Interesting to go back and see with hindsight how Choice recommendations turned out in the long run; 2. If a certain brand isn’t included in a current test (eg: Philips food processor) I could go back and see how that brand fared in a previous test; 3. Those of us who shop second hand can see how products we are looking at now tested when they were new.

I share oscelt’s dismay at the recent food processor test.

Most of the machines I would consider from the test (I set max price $200) had poor comments from users and at productreview.com.au, which I always read in conjunction with Choice to form an overall view. The little Kambrook seems to have happy customers despite its low price, though in store it looks rather fragile.

I have been reviewing the earphone reviews by Choice and am somewhat disappointed. These products have been reviewed through subjective assessment by 5 people, whereas such electronic devices lend themselves to objective analysis through computerised analysis of physical audio outputs. People’s hearing changes throughout the day, whereas the measured output is independent. Further, there is no mention of a blinded assessment or randomisation, both easily introduced as partial mitigating factors.

This is however not just confined to headphones but seems part of a wider trend of subjective assessments by Choice when more objective measures are available.

Choice should include scientific methodology where able to further their claims of independent reviews and excellence in the field of public accountability for industry.

Welcome to the community @Foxtopher.

Lab testing is a long followed audiophile practice. Is it essential in all instances?

Choice’s hands on approach to a portion of their testing provides feedback the technical comparisons do not. The manufacturer’s specifications and other online reviews likely make up for any omissions by Choice.

P.S.

I stopped worrying years ago about nuanced differences. Partly because the technical differences between quality products today are small enough to be insignificant. I.e. any measured difference does not necessarily translate to an appreciated difference at the ear. Power amp and noise making box ratings excepted. Still, with old vinyl and a Rega Planar 3 at source it is not all bad.

Hi, not sure if this issue has been raised already (I did do a search through previous topics here before posting) and apologies if this is repeating previous discussions.

I would like to see Choice product comparison/reviews include a category in the review relating to the “capacity to repair or fix” the product including whether the manufacturers of the product provide access to spare parts. Reluctance to add to the landfill and protection of the environment make this an increasingly important issue and there needs to be some “pushback” on manufacturers who make products that are irreparable.

Hi @catfar, I merged your comment into this compendium of other test and report comments.

You may have already found related topics such as

and there are numerous posts about repairability (and more often lack thereof) in various product specific topics - search the Community for ‘spare parts’.

One problem is that many of us have discovered there is a difference between finding a part on a web site or in a catalogue, and holding that part in one’s hand. It can be hit or miss and parts supplies, especially once a manufacturer’s warranty period for the ‘last one sold’ expires, seem to vanish in milliseconds. As an analogy of sorts some product cycles are faster than Choice testing and publishing, so by the time a test is published we can no longer find the now discontinued or superseded models that were top rated only months ago. Many times parts are available for a cost approaching the price of a replacement product, another aspect that is individual to each product; Choice generally publishes and uses RRPs with advice to shop around, and most of us buy on sales so a part that might be 15% of RRP might be 50% of the regularly obtainable sale price, if that makes a point. All those aspects are problematic and complex to quantify.
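The RRP versus sale price point can be put as a small worked example. This is only a sketch with made-up dollar figures (a $30 part, a $200 RRP and a $60 sale price are assumptions chosen to reproduce the 15% and 50% above), and `part_share_percent` is a hypothetical helper, not anything Choice publishes.

```python
def part_share_percent(part_price, product_price):
    """Return the part price as a percentage of the product price."""
    if product_price <= 0:
        raise ValueError("product_price must be positive")
    return 100 * part_price / product_price


# Hypothetical figures, chosen only to match the 15% / 50% illustration.
part = 30.0        # price of the spare part
rrp = 200.0        # recommended retail price of the whole product
sale_price = 60.0  # price actually obtainable on sale

print(f"{part_share_percent(part, rrp):.0f}% of RRP")
print(f"{part_share_percent(part, sale_price):.0f}% of the sale price")
```

The same part looks cheap against the RRP and expensive against what a shopper would really pay, which is why a single ‘parts cost’ figure is hard to report fairly.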

If you have a suggestion for a methodology for reporting ‘spares’ that would be generally applicable please post what you would find acceptable and Choice might consider it, noting Choice has been grappling with this ‘ask’ for a while.

Indeed it has been raised before. In addition to the previous list it was raised in September 2018 (Suggestion(s) for the way Choice tests). Thanks for raising the issue again.

Dear Choice Community,

In the interests of reducing waste, pollution and excessive resource consumption, it would be fantastic if Choice could rate products according to their repairability as well. It would be even better if we had laws to mandate it, as France now has with respect to electronics such as laptops and smartphones.

It’s time to end current practices such as planned obsolescence, withholding of repair manuals and specialised tools and techniques, such as the use of adhesives which damage casings to prevent opening and repair. Neither we nor the planet can stand it.

What do others think and how feasible is it?

- Veronika

Welcome to the Community @mercury,

I merged your post into this existing one that is more wide ranging, but please note @catfar’s post of 9 Jan and the related topics I linked in my reply.

Therein lies the problem. It would require a significant effort, as well as a way to define what is simple, easy, hard, etc, and to account for parts availability, costs, tools, and so on.

A comparative score such as shown in your linked article is somewhat of an arbitrary number, yet may be useful to compare products. But what it means for any individual is unknown because of parts availabilities and prices of same, special tools, and so on.

The consensus is that Choice does not have the resources to tear down every product to check, and if they did the pressures of publication times would create a problem ordering and receiving parts. Many think an index would have to rely on data from external (to Choice) sources and would be more easily gamed than the existing attempts to guide consumers, such as health star, energy, water, and similar efficiency ratings.

That being written, given the linked topics and the Choice advocacy in progress, I trust the point is made that it is on Choice’s ‘radar’.

It’s a great idea, except Apple does its best to stop others from doing anything (repair or addition) to their beloved products: using special tri-lobe screws, heat bond gluing components instead of fixing with removable/returnable components, having to completely dismantle a product to access the battery for replacement, etc.

So while one of their products may get a 6/10 for repairability, it is really only repairable by Apple, so is this a legitimate score? Repairability should be a measure of the difficulty to fix by anyone with the skill to undertake the work successfully.

I replace batteries in lots of non-Apple devices and I can say that glue or double sided tape is almost ubiquitous. A spudger and a heat gun or hair dryer are often needed. Older models often used screws but as the units get thinner and use more glass, screws are a fading item.

I have repaired quite a few Apple phones and it is getting harder and harder to repair or even replace the battery. They use some absolutely minute (tri-lobe) screws, so it is clearly feasible to utilise these tiny screws instead of using the double sided adhesive products.

The iPhone 13 seems to indicate the [irrelevance] efficacy of France’s law in a practical sense, and suggests how hard it would be to rate repairability. Choice would struggle to replicate this effort for each phone, and more so for each product tested.

One of the main criteria Choice uses in many reviews is ‘time to do’ but that ‘time’ often gets distilled into a percent. Say the top rating (that includes the shortest time and ‘something else’) is 80% and the worst 55%.

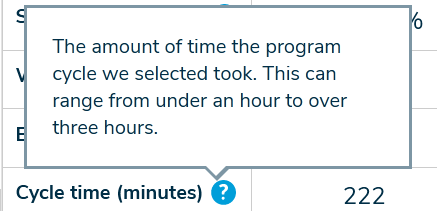

What does that mean in a practical sense? Is the difference 50 seconds, 5 minutes, 15 minutes, or 50 minutes? Would readers care about a few minutes? Perhaps not, but as with washing machines the difference between a 1 hour and a 2 hour cycle is often important, and there the time is noted in the tables. From the washing machine review

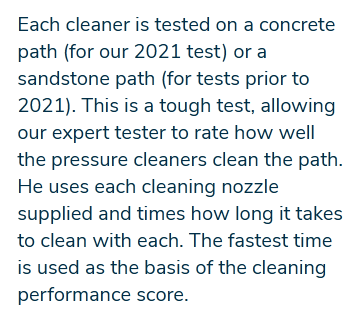

But for pressure washers as an example, from the reviews how can a reader judge whether one might do eg. the patio in an hour, one in 55 minutes, and another in 40 minutes? Distilled cleaning scores of 90% to 35% provide little insight into where the raw times fall. One assumes the time recorded was the time to clean the test panel, that the test panel is essentially a constant for the purposes, and that the cleaning is continued until the panel is ‘clean enough’ by some criteria. Even a note that the times are linear between quickest and longest percent, with those times being shown, would be an improvement; eg 50% takes twice as long as 100% and the fastest was 15 minutes.
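To show what such a note would let a reader do, here is a rough sketch that turns a percentage score back into minutes. It assumes the scores are linear in time between the best and worst products, which is my assumption and not Choice’s documented method. The 90% and 35% scores come from the review; the 40 and 60 minute times and the name `estimate_minutes` are hypothetical.

```python
def estimate_minutes(score, best_score, best_minutes, worst_score, worst_minutes):
    """Estimate a raw time from a percentage score by linear interpolation.

    Assumes (hypothetically) that scores fall on a straight line between
    the published best and worst score/time pairs.
    """
    if best_score == worst_score:
        raise ValueError("best_score and worst_score must differ")
    # Fraction of the way from the best score down to the worst score.
    fraction = (best_score - score) / (best_score - worst_score)
    return best_minutes + fraction * (worst_minutes - best_minutes)


# Hypothetical anchors: a 90% product takes 40 minutes, a 35% product 60 minutes.
for score in (90, 62.5, 35):
    minutes = estimate_minutes(score, 90, 40, 35, 60)
    print(f"{score}% -> about {minutes:.0f} minutes")
```

With only the two end times published, a reader could place any product in between, which is all the suggested note is asking for.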

To Choice’s credit, the saucepan review has, drum roll… (snip of one)


Kudos. Hopefully including raw time like this will propagate to every test where time is measured.

I agree with you wholeheartedly.

I believe, but haven’t looked, that this has been discussed before and there was general agreement with your sentiments.

That there was. The remaining point is translating that into consistent application across all the tests that have a time % metric but do not yet include absolute times in the tables.

I see that this category has been used for feedback on the CHOICE site as well as the Community site. This is some minor feedback about the former.

I like the fact that the summary of products and their ratings in a CHOICE review includes an indication of community member reaction to each product.

However, I think it could be a little more informative. At present it only says “[n] members of the CHOICE community who reviewed this product would recommend it”, without indicating how many such reviews there were. The reader must go to the “Read what they say” link to find out if anyone has had less complimentary things to say about the product.

The uncomplimentary reviews might well be by far the majority. An example from the dishwashers review https://www.choice.com.au/home-and-living/kitchen/dishwashers/review-and-compare/dishwashers: on one product “4 members of the CHOICE community who reviewed this product would recommend it” turned out to mean that only four out of 14 would recommend it.

Would it be possible to include the total number of community reviews in the summary statement, eg “4 of 14 members of the CHOICE community who reviewed this product would recommend it”?
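As a rough illustration of how small the change is, the wording could be produced along these lines. This is only a sketch: `recommendation_summary` and its parameters are hypothetical names, and I have no knowledge of how the CHOICE site actually builds this line.

```python
def recommendation_summary(recommend_count, review_count):
    """Build the summary line showing recommenders out of all reviewers."""
    if review_count < 0 or recommend_count < 0:
        raise ValueError("counts must not be negative")
    if recommend_count > review_count:
        raise ValueError("recommend_count cannot exceed review_count")
    return (
        f"{recommend_count} of {review_count} members of the CHOICE community "
        "who reviewed this product would recommend it"
    )


# The dishwasher example from above: four recommendations out of 14 reviews.
print(recommendation_summary(4, 14))
```

The only extra input is the total number of reviews, which the “Read what they say” page already has.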

Hi @isopeda

I moved your post into this topic from 2017 that is still relevant. If you scan it you will notice the member comments have been called out as you suggested.

as well as some other issues.

Choice has some real problems trying to assure Member Comments on reviews are for the tested product (some are not!) and because some products have reviews and some do not they can be misleading if one is comparing a few products, some with comments and others without.

Improving the line ‘X members would recommend this product’ seems a trivial improvement to the presentation, yet nada after 2 years.

@BrendanMays it would be nice to see some tangible improvements that should be simple to implement, especially when some seemingly more difficult tasks such as formatting and printing comparison tables were accepted and done.