… shaped like a what? and the same size? at least it was yelling ‘obstruction’ and not offering to buy her a drink …

Delusions?

Their creator, Isaac Asimov, didn’t think so. He added a fourth one - the Zeroth Law: A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Unfortunately, there is no law yet requiring that the programming or constructed intelligence of a robot, or of any automated system, incorporate any of Asimov’s imagined future.

- There is no assurance robots and automated intelligence will be aware of such laws.

- There is no assurance the laws are secure against a failure or faulty robot.

- There is no assurance a robot or automated system will be accountable for the consequences of its actions.

The movie ‘Westworld’ offered an alternative vision of the future. Asimov had plenty of time subsequent to the 1973 release of the movie to reconsider the idealistic view of how AI systems and robotics might perform.

P.S.

Please excuse me wandering a little off topic here.

Perhaps if Asimov had been a Centrelink recipient?

The so-called Australian Govt “Robo Debt” debacle is proof enough that Asimov’s laws of robotics have no legal standing in today’s world.

(Repeat loudly in a very Monty Python-like and Cleese pitched voice of sarcasm : “Cause No Harm! Cause no Harm! Cause No Harm! … Really?” )

The whole point of Caves of Steel was that someone could get a robot to break the three laws - and I think one of its sequels involved a planet that limited the definition of ‘human’ to its own residents, permitting robots to harm any interlopers. (A quick look elsewhere confirms that it was the Solarians, from Foundation and Earth.)

He also wrote a bunch of short stories about how the laws could go wrong. Some of them are hilarious - such as robots getting religion.

On the positive side of the ledger, Asimov’s three laws got others thinking.

I know what you are trying to say here, but it is rather off target. This was not a case of AI gone wrong; it was a case of very bad policy being implemented that just happened to include a computer to put it into effect. One of the consequences of automation is that your successes can be achieved faster and on a grander scale, but so can your failures. Make no mistake: the AI didn’t make this mistake, the warmware did.

The challenge is how AI interprets that definition. Is a robot serving McDonalds in fact harming people by feeding them unhealthy food?

This reminds me of a DLC mission from the video game Fallout 4. The player must defeat an army of crazed robots and stop their creator, ‘The Mechanist’. On defeating the Mechanist, she is dismayed and says she only wanted to help people. As it turns out the robots were all given the directive to improve the lives of humanity. The AI determined that humans would suffer pain anyway, so a quick death was the best way to minimise pain.

Although it’s a video game, it’s not too much of a stretch to imagine real AI coming up with similarly misguided conclusions (just look at the above resume screening example).

I agree. For now, any system, whether blessed with superior skills or just dumb preprogrammed logic, is subject to human whim.

We are a long way removed from blaming automation of any process, or any drone or robot, for any wrongdoing. It is still all by human hand, but oh so convenient at times to hide behind the machine?

“Computer error”.

A positive outcome from AI.

Another positive outcome, but does the algorithm qualify as AI?

https://www.abc.net.au/news/2020-03-07/nrl-taking-the-two-penalty-goal-risk/12033614

Some might suggest that most major team sports are no longer sport at all. They are just a branded business delivering entertainments to the masses and wealth to a select few. Any aids to delivering for the business should be welcome.

For those who hold to higher ideals in sport: should any form of AI or algorithm be accepted as an aid to in-game play, or should it be banned? Adding non-human aids to sport is certainly “not cricket”, and is definitely held as cheating in the sport of Chess!

Save us, AI!

It’s Google. We can trust Google.

The resulting systems will be totally controllable. Really!

It’s fine - we might not understand it, but the computers will.

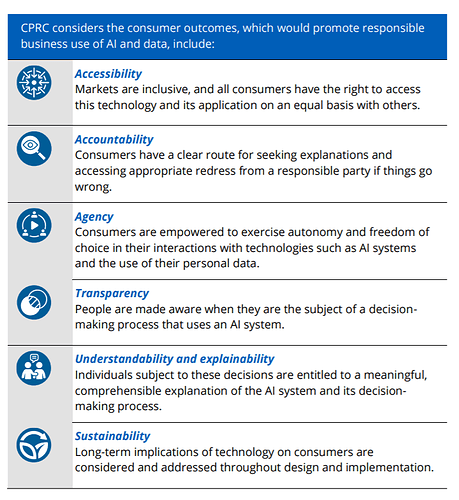

In collaboration with Gradient Institute, Consumer Policy Research Centre, CHOICE and CSIRO’s Data61, the Australian Human Rights Commission has launched a new technical paper today showing how businesses can take practical steps to address algorithmic bias in AI systems. Here are the end goals we are hoping to achieve:

[P 57, Using artificial intelligence to make decisions: Addressing the problem of algorithmic bias]

If you’d like to read the full document, download the PDF. We welcome any thoughts or response to the paper, leave a reply below.

That full document access on the page is confusingly under what appears to be the title of the “Commissioner’s foreword” on the issue, but the download is the full paper so don’t be confused by the link’s heading.

The problem to be addressed is to do with algorithmic computations. That may be software that learns and modifies its own behaviour at some level based on experience. The risk is that, without being explicitly told, it may ‘learn’ that certain classes of people ought to be targeted, and that may be illegal. It is also a case of the “is-ought” problem. For example, the software observes that Aboriginal people are jailed more often than whites and so concludes that they ought to be jailed more often. The problem may also be badly constructed algorithms that do not involve machine learning.

We saw this confused use of ‘AI’ in the robodebt scandal. This scheme is widely described as a failure of AI. I don’t have access to the code or the specs for the software, but I very much doubt that the errors it produced were a consequence of machine learning. My assessment is that it was a failure of policy interpretation, and that under such policy a human given the same data and pencil and paper would have performed the same erroneous calculations. It was not a result of machine learning on the dataset targeting the wrong people but of a wrong policy direction. While not mentioning robodebt, the foreword does say the problem may happen with social security. It is hard to imagine the authors were not familiar with the scandal.

The background section goes on to define AI in terms of machine learning and to define algorithmic bias:

“This paper uses the term algorithmic bias to refer to predictions or outputs from an AI system, where those predictions or outputs exhibit erroneous or unjustified differential treatment between two groups.”
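To make that definition a bit more concrete, here is a minimal sketch of how “differential treatment between two groups” might be measured, assuming all we have is a list of decisions tagged with a group label. The function names and the sample data are made up for illustration and are not taken from the paper.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favourable outcomes for each group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def rate_gap(decisions, group_a, group_b):
    """Difference in favourable-outcome rates between two groups."""
    rates = selection_rates(decisions)
    return rates[group_a] - rates[group_b]


# Hypothetical data: group A approved 8 times out of 10, group B 5 out of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
print(round(rate_gap(sample, "A", "B"), 2))
```

A gap like this only shows that the two groups are treated differently. Whether the difference is “erroneous or unjustified” is still a judgement somebody has to make.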

So using software to implement bad policy is not in scope, just machine learning gone wrong. That is fine; there is a broader question about unintended consequences of decisions based on machine learning. The problem that I see is that those who only read the Foreword and Exec Summary will not understand that.

Couldn’t an easy solution be to have human oversight of any decision, or as a minimum, a review/appeal process for any AI decisions?

While negative bias could potentially be caused by AI, so could favourable bias. An example may be an individual who applies for a loan, where the AI system, based on previous data, allows more money to be loaned than should otherwise be the case. Human oversight or checking of outcomes ensures that AI is used as a tool to support decisions, rather than making all the decisions.
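One way the oversight idea might look in practice, purely as a sketch: only clear-cut cases are decided automatically and everything else goes to a person. The score range, the two thresholds and the function name are all assumptions invented for this example, not anything from the paper.

```python
def route_decision(score, approve_at=0.8, reject_at=0.2):
    """Route a model score (0 to 1) to an outcome.

    Clear-cut scores are decided automatically; anything in between
    is passed to a human reviewer. The thresholds are hypothetical.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= approve_at:
        return "auto_approve"
    if score <= reject_at:
        return "auto_reject"
    return "human_review"


for score in (0.95, 0.5, 0.1):
    print(score, route_decision(score))
```

Narrowing the automatic bands sends more cases to people, which costs more but leaves fewer decisions entirely to the machine. An appeal process would sit on top of this for the automatic outcomes.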

The paper already acknowledges that human oversight can be part of this AI decision making. That is no promise of a better outcome. The AI system learns to decide based on a seed of decisions already made by humans. This seed already carries the biases humans applied in their previous decisions.

One difficulty I see is how to remove these subtle biases or to negate them. We are not purely analytical; we make decisions based on upbringing, our place in society, our prejudices about race, colour, religion and so on. Where one decision maker might approve, another might refuse, particularly when the decision is balanced on a knife edge. Using these decisions as the seed then adds some weight one way or another. They might try to get a “fair” cross section to seed, but who determines fair? Another bias may be introduced here. As an example, someone subconsciously doesn’t like the name John very much, so rejects a few more Johns for the seeding based on the name. It doesn’t need to be much, but it introduces that small bias. Over time that bias could be amplified by the AI process.
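A toy illustration of how a small seed bias could be amplified, under some strong assumptions: the “model” here is just a majority rule per group, and it is retrained on its own decisions. Real systems are far more complicated, and the names and numbers are made up, so treat this as a worst-case cartoon rather than how any actual system works.

```python
def train(decisions):
    """'Learn' the approval rate per group from past (group, approved) pairs."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {group: approved[group] / totals[group] for group in totals}


def predict(rates, group, threshold=0.5):
    """Approve only if the group's learned approval rate reaches the threshold."""
    return rates[group] >= threshold


# Hypothetical seed: applicants named John were approved slightly less often.
seed = (
    [("John", True)] * 48 + [("John", False)] * 52
    + [("Other", True)] * 52 + [("Other", False)] * 48
)
first = train(seed)

# The model now decides 100 new cases per group, then is retrained on its own output.
new_cases = [(group, predict(first, group)) for group in ("John", "Other") for _ in range(100)]
second = train(new_cases)

print(first)
print(second)
```

In this cartoon a four-point difference in the seed becomes an all-or-nothing difference after one round, because the hard threshold throws away how close the original call was.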

Then we could have a perceived bias, i.e. we think the machine has reduced the prospects of one class getting a positive decision by using “bad” data. The machine learning may have identified a problem with a certain group or groups; it then weighs this risk more highly for individuals of those groups, reducing the prospect of a favourable outcome. We see this weighing as a bias, so we tinker with the algorithm to introduce what we think is a better, fairer outcome and unintentionally skew the system another way.

Most of this is discussed in the paper; I don’t think it has an answer as yet.